
Faster and Lighter Online Sparse Dictionary Learning

Shay Ben-Assayag & Omer Dahary

Supervised by Jeremias Sulam

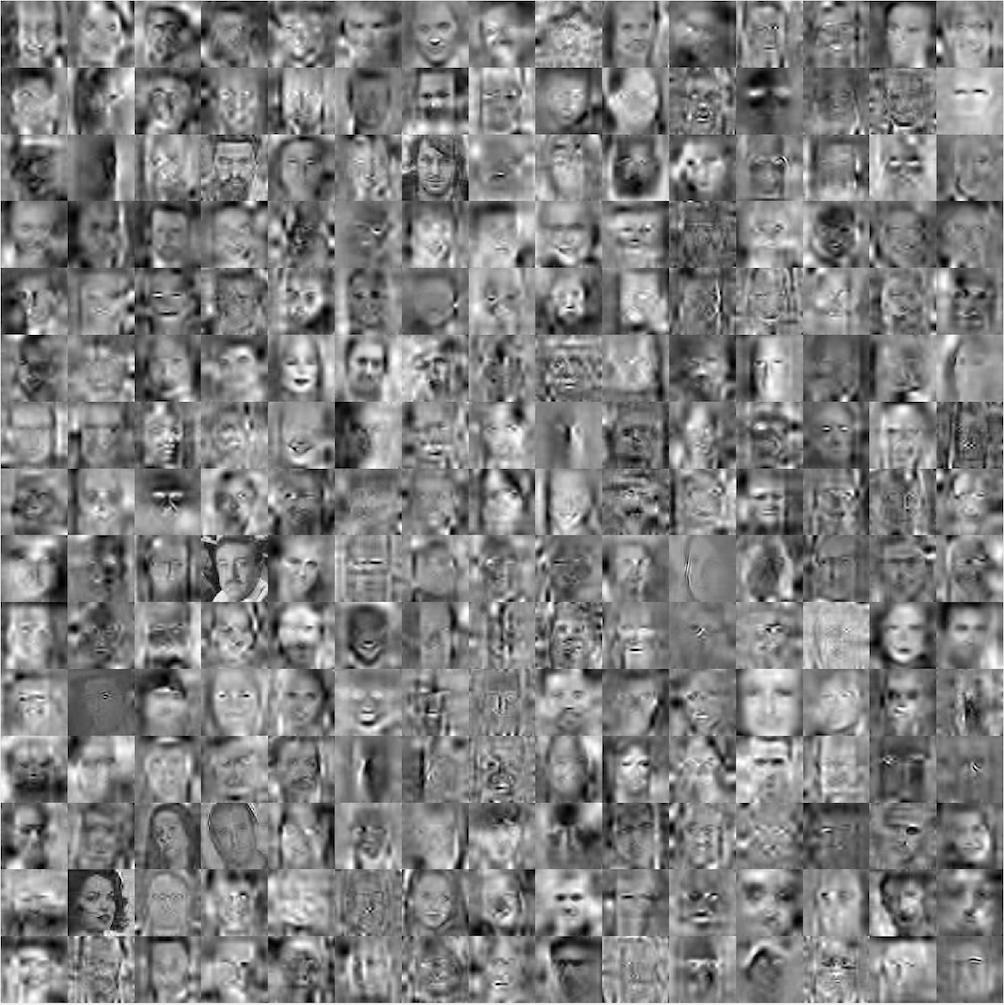

Sparse representation has been shown to be a very powerful model for real-world signals, and has enabled the development of applications with notable performance. Combined with the ability to learn a dictionary from signal examples, sparsity-inspired algorithms often achieve state-of-the-art results in a wide variety of tasks. However, most existing methods are restricted to small dimensions, mainly because of the computational cost of the dictionary learning problem. In image processing, this means handling small image patches instead of the entire image. The recently proposed Trainlets framework circumvents this problem: its authors introduced the Online Sparse Dictionary Learning (OSDL) algorithm, which can efficiently handle larger dimensions. This approach is based on a double sparsity model that uses a new cropped Wavelet decomposition as the base dictionary, together with an adaptive dictionary learned from examples using Stochastic Gradient Descent (SGD) ideas.
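To make the double sparsity idea concrete, the following is a minimal sketch of one stochastic update, not the OSDL algorithm from the Trainlets work. It assumes the effective dictionary factors as `D = Phi @ A`, where `Phi` is a fixed base dictionary (here a random orthonormal matrix standing in for the cropped Wavelet decomposition) and `A` is a column-sparse adaptive matrix. The function names, the crude thresholding-based sparse coder, and the parameters `s`, `k`, and `lr` are all illustrative assumptions.

```python
import numpy as np

def hard_threshold_columns(A, k):
    """Keep the k largest-magnitude entries in each column of A, zero the rest."""
    out = np.zeros_like(A)
    idx = np.argsort(-np.abs(A), axis=0)[:k]   # (k, n_atoms) row indices to keep
    cols = np.arange(A.shape[1])
    out[idx, cols] = A[idx, cols]
    return out

def sparse_code(D, Y, s):
    """Crude sparse coding (a stand-in, not OMP): pick the s atoms most
    correlated with each signal, then solve least squares on that support."""
    X = np.zeros((D.shape[1], Y.shape[1]))
    for j in range(Y.shape[1]):
        support = np.argsort(-np.abs(D.T @ Y[:, j]))[:s]
        coef, *_ = np.linalg.lstsq(D[:, support], Y[:, j], rcond=None)
        X[support, j] = coef
    return X

def sgd_step(Phi, A, Y, s, k, lr):
    """One mini-batch update of the sparse matrix A for the model Y ~ Phi @ A @ X."""
    D = Phi @ A
    X = sparse_code(D, Y, s)
    R = Y - D @ X                              # residual on the mini-batch
    grad = -Phi.T @ R @ X.T                    # gradient of 0.5*||Y - Phi A X||_F^2 w.r.t. A
    A_new = hard_threshold_columns(A - lr * grad, k)
    norms = np.linalg.norm(Phi @ A_new, axis=0)
    norms[norms == 0] = 1.0                    # leave all-zero atoms untouched
    return A_new / norms                       # effective atoms Phi @ a_j get unit norm

# Toy run on random data (illustrative sizes only).
rng = np.random.default_rng(0)
n, m, batch = 16, 24, 32
Phi, _ = np.linalg.qr(rng.standard_normal((n, n)))   # orthonormal stand-in base dictionary
A0 = hard_threshold_columns(rng.standard_normal((n, m)), k=4)
Y = rng.standard_normal((n, batch))
A1 = sgd_step(Phi, A0, Y, s=3, k=4, lr=0.01)
```

Because only `A` is stored and updated, and each of its columns holds at most `k` nonzeros, the memory and per-step cost depend on `k` rather than on the full signal dimension, provided `Phi` can be applied quickly. In this toy sketch `Phi` is a dense matrix, so that benefit is only suggested, not realized.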

Building on this work, which has shown that dictionary learning can be scaled up to tackle a new level of signal dimensions, our project focuses on studying and improving OSDL. In this report, we present several modifications to the algorithm aimed at addressing its limitations, the results of experiments conducted on large, high-dimensional datasets, and our conclusions and suggestions for future work.

Please see the project report.

Please see the final presentation.

Please see the code.